Stretch: The History of BYU's First Supercomputer

Edward Teller had a problem. The Hungarian-American physicist, the closest thing America has ever had to a genuine mad scientist, was working on the next generation of nuclear weapons but had reached a point where the calculations were becoming too complex. The first nuclear weapons, the ones dropped over Japan, were relatively simple devices. They relied on conventional explosives forcing fissile material together to set off an explosive chain reaction. Getting them to work was still a tricky bit of math, but the second-generation device would be even more complex. Teller had realized that you could chain together multiple implosion devices indefinitely, combining the explosive power of many simple atom bombs into one complex weapon. The problem was getting the chain to fire.

Technical details about these devices, now known as thermonuclear weapons or H-bombs, are closely guarded secrets. To this day, outsiders can only guess at exactly how they work, but the general principle is more or less understood. When an H-bomb is lit off, the reaction starts with a simple implosion device, which goes critical and begins the fission reaction. Surrounding this first-stage device is some sort of material (we don’t know exactly what it is). This material reacts to the increasing heat, pressure, and radiation at the core of the bomb and turns into a superheated plasma. This plasma in turn triggers a second nuclear core, which explodes as well. In principle, the chain can go on indefinitely: as far as physicists can tell, there is no theoretical limit to how powerful an H-bomb can get, only design limits.

That roiling nuclear plasma poses a computational problem, though. The dynamics of a superheated plasma are described by detailed fluid equations. These are some of the most complicated equations in physics, and they only get more mathematically intensive as you add dimensions. Calculating how a nuclear plasma would move inside a three-dimensional bomb casing involved such an extensive set of partial differential equations that it quickly leapt beyond the bounds of human calculation. Teller needed a computer to run the simulations at a speed compatible with a human lifespan.

And therein lay Teller’s problem: there was not a computer in the world fast enough to simulate the plasma. So he set about designing one or, more accurately, giving IBM the specifications for the machine he needed.

As IBM set about building this new computer they realized that they had to make a quantum leap forward in technology. To manage all the variables Teller needed, they would need to build a computer so fast that it would be considered a whole new category of machine: the supercomputer, IBM’s first.

To start, IBM completely reworked the hardware. Earlier computers had used vacuum tubes to relay information through their logical processes. Tubes worked at the time, but for what IBM had in store they would be too slow and unreliable. Instead, IBM switched to the transistor. These little devices were made of a semiconductor material and could act as both a switch and an amplifier, building up the logic gates that make a computer work. The switch to transistors was such an advancement in computer technology that IBM gave the new 7030 the moniker “Stretch.” The name stuck.

The first Stretch was delivered to Los Alamos in 1961. A second machine was custom modified for the National Security Agency. This supercomputer was nicknamed “Harvest” and specialized in scanning cryptographic documents for keywords. Other weapon development clients showed interest in Stretch, leading IBM to consider an additional commercial run for private sector businesses.

Unfortunately, Stretch never lived up to its promise. The machine was much slower than advertised. Though it could run nine programs simultaneously, it was only about half as fast as Edward Teller and IBM had originally envisioned. Despite representing a leap forward in hardware, Stretch held the title of world’s fastest computer for only three years before it was outpaced by the CDC 6600, built by Control Data Corporation, the company that ultimately spawned the iconic Cray supercomputer line. Commercial contracts never materialized, and the program was quietly wound down in favor of faster machines. Though Stretch was never the success IBM envisioned, it was a key step in the development of supercomputers and gave the company the experience it needed to create more effective machines down the line.

The Stretch that ultimately ended up at Brigham Young University, becoming the university’s first supercomputer, started its life under one of the military contracts. It was built for the MITRE Corporation in Bedford, Massachusetts. This non-profit weapons development lab was spun off from MIT’s Lincoln Laboratory, itself a cutting-edge military research outfit.

While it’s not clear what MITRE was doing with their Stretch, it’s possible they were using it to support the Semi-Automatic Ground Environment program. SAGE, as it was more often known, was a marvel of hardware design and information processing. The system was built to unify the command and control capabilities of the defensive weapons dotting the United States in case the Cold War went hot.

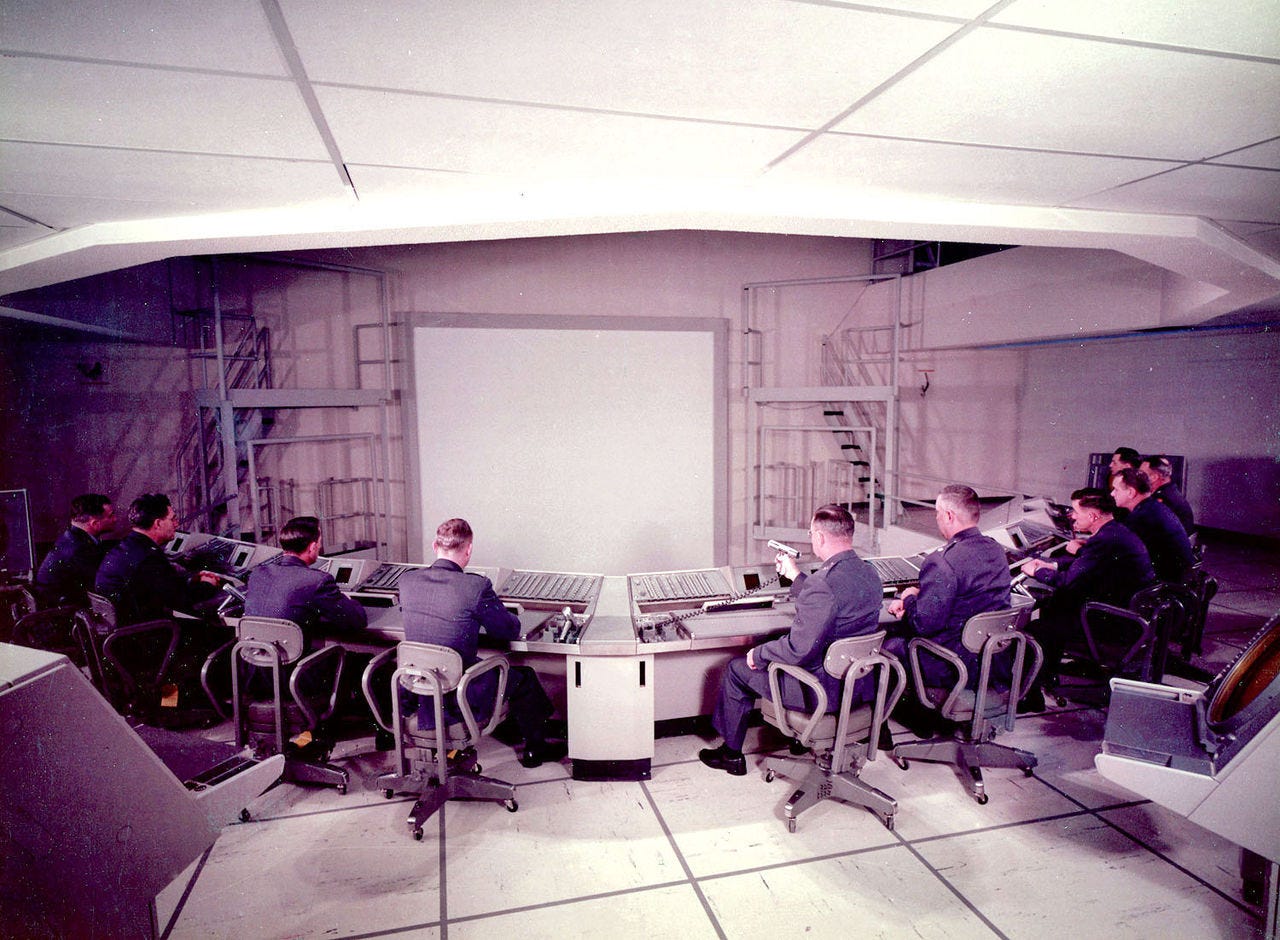

SAGE integrated real-time military information from all around the country. Massive computer buildings were constructed in key locations, and each nondescript concrete building housed a supercomputer that took up a whole floor, with a footprint of 22,000 square feet. These lightning-fast IBM machines would take in information from fighter planes, surface-to-air missiles, and radar emplacements and use that data to build a real-time combat map to project on large display screens. Air Force officers would use these displays to coordinate defensive actions throughout the country.

In the event of a nuclear war, this allowed US commanders to manage the battlespace over the whole country. A general at McGuire Air Force Base in New Jersey, for example, would be able to direct defensive operations over the whole New York sector in real time. He could select emplacements to attack incoming targets, direct interceptors to bomber streams, and view integrated radar data, all from one building. SAGE was so powerful that it could even feed data into a fighter plane and control the autopilot, automatically sending the pilot on an intercept course.

The most important feature of the system, for the modern world at least, was SAGE’s networking: its computers exchanged digital data with radar sites and other centers over telephone lines. In hindsight, this can be considered a very primitive forerunner of the internet.

Near the end of Stretch's career with MITRE, a Mormon computer engineer named Dr. Gary Carlson — the man who would ultimately bring the computer to the Mountain West — was beginning his career within the information technology services of the church. Despite the traditionalist nature of the religion, the church was quick to see the benefits of computers and hired Dr. Carlson to implement a new system to track temple ceremonies.

The Mormon church teaches that there are a series of ordinances one must complete throughout one’s life in order to go to heaven. These ritualistic ordinances start at eight years old with baptism by immersion followed by confirmation, mimicking John the Baptist’s baptism of Jesus Christ. Once a young Mormon is baptized and confirmed, they receive the gift of the Holy Ghost and are considered an official member of the church. Children born to Mormon families receive a membership record that the church tracks, but baptism sets a child on the path toward the rest of the blessings and ordinances in the church.

The next most important ordinances are performed in the temple, those secretive gleaming white buildings dotting the United States. Once a member turns eighteen, they are eligible for these ordinances. First up is the initiatory. In this ceremony a member is given permission to wear the garments, the famous underwear set all temple-worthy members must wear. The member is also ceremonially washed with a drop of water and anointed with olive oil.

Next up is the endowment, usually performed the same day. This ceremony has members dress in special robes and teaches them the handshakes, symbols, and secret passwords that will allow them to pass by the guardian angels protecting God’s realm and live in eternal splendor. Finally, there is the sealing, the Mormon temple wedding ceremony. When two endowed members marry they perform a ceremony where they dress in their temple robes and exchange handshakes across an altar. This is considered the highest ordinance, and binds the marriage together for all eternity.

However, the church is not content to have these ceremonies given only to living members. They are, after all, considered a requirement for entering heaven. To give all human beings the opportunity to live with God, the church performs these ordinances vicariously for the dead. Members find the names of the dead by searching genealogical records and then submit those names to the temple.

Before the 1960s, these vicarious ceremonies were performed in the order above. That created bottlenecks, which is most likely what drove the church to adopt computers. Baptisms for the dead were relatively easy: standard practice is to have one person get baptized vicariously for a dozen people in one go. The task is often given to teenagers, who stand in the temple baptismal font and get dunked under the water over and over again. It’s kind of like waterboarding.

Endowments, on the other hand, take a long time. The ceremony itself is around two hours long, and a member can perform a vicarious endowment for only one person at a time. One baptism takes thirty seconds; one endowment takes hours.

The church’s solution to this ever-growing problem was to allow the ceremonies to be performed out of order. Even if the sequence was required on Earth, with one ceremony following the other, presumably God has less need for such strict temporal ordering in timeless eternity. Performing the ordinances out of order, however, required a better tracking system than paper records. That’s where Dr. Carlson came in. He designed a computer database system for tracking vicarious ordinances, revolutionizing the way Mormon temple work was done.

The work paid off: in 1963 Dr. Carlson was hired by Brigham Young University as the head of the Computer Research Center. Over the next few years the department was moved to different buildings on campus. Each time the Center moved it got more floor space, letting Dr. Carlson purchase and install new computer equipment. By the end of the decade, the Center had become big enough that it was reorganized into two new departments: the logistical Computer Services department and the academic Computer Science Department, currently part of the College of Computational, Mathematical, and Physical Sciences.

The slow accumulation of hardware had made BYU one of the preeminent computer centers in the Western United States, but it had yet to acquire a supercomputer. And no computer science department was complete without a high-powered machine.

Dr. Gary Carlson got to work, but faced an uphill battle justifying the purchase to university leadership. The department’s other IBM machines were modernizing the way the campus ran, but a computer dedicated solely to research seemed like a step too far. Top-of-the-line machines at the time cost $3 million to $4 million, so Dr. Carlson needed to find something cheaper.

At that time, MITRE was decommissioning its Stretch. Like its sister machines, the MITRE Stretch had been rapidly outclassed. MITRE had used it for only a few years and was now looking to offload it. Somehow, MITRE and Dr. Carlson got in contact, and MITRE agreed to sell its Stretch for only $50,000, a massive bargain. The price was right for the university. The Massachusetts company started packing up the cabinet-sized hardware and sending it out West. Because IBM was no longer building Stretches, Dr. Carlson also struck a deal with Los Alamos to cannibalize its computer, the original Teller machine, for spare parts. Within 14 months Stretch was in operation. Combining acquisition costs and installation fees, the university had spent only $165,000.

While Stretch wasn’t that impressive at the time, having been outdated for about a decade, it gave the nascent Computer Science Department the experience it needed in high-powered computing. Soon the university invested in a spin-off computer science research facility, the Eyring Research Institute, which almost immediately started picking up military contracts from nearby Hill Air Force Base.

Stretch and the ERI ended up being a good investment for the university. The ERI started raking in millions of dollars in profits, enough money that the Utah Supreme Court stripped it of its tax-exempt status. The Computer Science Department rapidly expanded, as did the Mormon church’s investment in information technology. BYU’s Stretch was dismantled in the early 1980s, by which point it had been far surpassed by the computers of its day. Still, the humble Stretch was a sign of things to come for Utah. The Wasatch Front is now a hub for software companies and high-powered computing, ranging from relatively prosaic companies like Adobe to the eminently spooky NSA espionage facility in Saratoga Springs. For a state that seems perennially stuck in the past when it comes to social issues, the money in Utah has its eyes on the future.